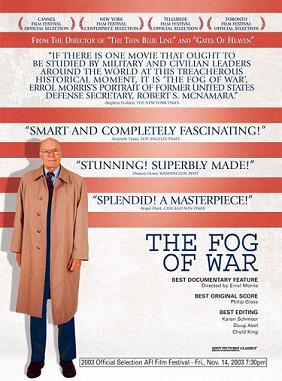

(The Fog of War movie poster with Robert S. McNamara. Source: Wikipedia)

Errol Morris’ 2003 documentary, The Fog of War: Eleven Lessons from the Life of Robert S. McNamara, is above all a film on the limits of human understanding. It is about the reflections of a supreme rationalist on the irrational forces that have shaped his life.

Robert S. McNamara reached the commanding heights of the world economy, military, and politics. He served as president of the Ford Motor Company, as one of the longest-serving secretaries of defense, and for 12 years as president of the World Bank. In everything he did, beginning in his formative years, he applied scientific precision and analysis; indeed, he was a pioneer of management science and operations research.

A graduate of Berkeley’s economics department, McNamara went straight to Harvard Business School, first as an MBA student and then, after a brief stint as an accountant with Price Waterhouse, as a professor. After the United States entered the Second World War and began its extensive bombing campaign in the Pacific against Japan, he was recruited to work in the Office of Statistical Control for the United States Army Air Forces. There he served under General Curtis LeMay and was tasked primarily with improving the effectiveness of B-29 bombers. One of McNamara’s chief findings under LeMay was that bombing rates were so low because pilots, facing 70% casualty rates of their own, were getting scared, turning their planes around, and inventing excuses for why they couldn’t finish their missions. McNamara reported his findings to LeMay, who, dismayed, applied a drastic solution: he would fly in the lead plane on every mission, and the crew of any plane that turned around midflight would be court-martialed. Bombing rates improved substantially overnight. McNamara’s fourth lesson is to maximize efficiency.

After the war was won, McNamara and nine of his fellow officers in the Office of Statistical Control – referred to as the “Whiz Kids” – were recruited to help turn around the Ford Motor Company. Through various financial, planning, and production analysis roles, McNamara worked his way up the corporate ladder. One of his most substantial achievements was the introduction of seatbelts, which, he had learned from studies conducted at Cornell University, would dramatically reduce the death rate in car accidents. Hence his sixth lesson: Get the data. Because of his tremendous success, he was eventually tapped to become the first person outside the Ford family to serve as the company’s president. Shortly after reaching the top at Ford, however, he was appointed to President Kennedy’s cabinet as Secretary of Defense.

It was in this role, as Secretary of Defense during the Kennedy and then Johnson Administrations, that his analytical background and technocratic modus operandi proved less effective and less advantageous than they had been in the past.

When it was discovered that the Soviet Union had moved missiles into Cuba and that more were on their way, setting off the Cuban Missile Crisis, McNamara advised a befuddled President Kennedy: “First, develop a specific strike plan. Then consider the consequences.” That is, develop a detailed plan allocating resources over time, and then predict the consequences using statistical models. But it was Tommy Thompson, the former Ambassador to the Soviet Union, who advised Kennedy to give Premier Khrushchev an honorable way out of the crisis. Having spent time with Khrushchev as ambassador, Thompson understood Khrushchev’s psychology and, in particular, the political pressure he was facing. Hence McNamara’s first lesson: Empathize with your enemy. It was Thompson, the man who understood the irrational forces acting on the leader of a nation, who prevented what McNamara called “staring down the gun barrel of nuclear war.”

Once the Vietnam War was fully underway, one of the ways McNamara and the Johnson Administration measured victory was through body count: how many North Vietnamese had died. The reasoning was that if we killed more of them than they killed of us, then we were winning. But this simple metric overlooks the end goal of the North Vietnamese: to unite the country under communist rule at any cost. The North Vietnamese were prepared to fight to the death, to the last man standing. Our metric for whether we were winning the war was therefore misaligned with the objectives of the war. Lesson eight: Be prepared to re-examine your reasoning.

***

Although this documentary is about a man of a past generation, it is highly relevant to our times. McNamara, with his elite education, raw intellectual horsepower, and rational faith in the triumphant power of analysis and calculation, embodies the term technocrat. He represents the belief that solutions to complex social and political problems can be systematically deduced if only the right variables are isolated, the right metrics are derived, or enough statistics are collected. His is a mindset that places undue emphasis on technology while overlooking the subtle nuances of human psychology. For instance, the Vietcong understood that if they could stage a dramatic, bloody assault that was televised to the American public, public support for the war would collapse, despite the heavy losses they would suffer against overwhelming American technological superiority. They were right, and McNamara was wrong. The technocrat is above all a believer in the ultimate power of the quantitative: everything can be reduced to a number and can thus be manipulated like an algebraic equation.

In his essay “Political Judgment”, the great philosopher Isaiah Berlin wrote, “My argument is only that not everything, in practice, can be – indeed that a great deal cannot be – grasped by the sciences… Those who are scientifically trained often seem to hold Utopian political views precisely because of a belief that methods or models which work well in their particular fields will apply to the entire sphere of human action, or if not this particular method, some other model of a more or less similar kind.” This is not to say that those trained in science have bad political judgment or should not be involved in statesmanship. It means that many political decision makers share a mindset, and a very dangerous one, that excludes irrational human forces from rational decision-making. Nations don’t go to war only because of rational calculations of self-interest, such as oil or territory, Thucydides would remind us. They also go to war because of fear and honor, two irrational motives. Human beings do many things irrationally, things that are in fact not in their best interest. Refusing to fly because you’re afraid the plane will crash, for instance, is an irrational fear. Getting into a fight with a student from your school’s rival because he called you a derogatory name is driven by an irrational sense of honor.

The term “the fog of war” derives from Carl von Clausewitz’s On War. In it, he explains, “The general unreliability of all information presents a special problem in war: all action takes place, so to speak, in a kind of twilight, which, like fog or moonlight, often tends to make things seem grotesque and larger than they really are. Whatever is hidden from full view in this feeble light has to be guessed at by talent, or simply left to chance.”

It is instructive for all of us, no matter how prestigious our universities, pedigrees, or credentials, to understand that there is indeed a fog that shrouds our comprehension of the world. In all complex human systems and events there are deep-seated, and more often than not irrational, forces at play that we cannot fully fathom. While technology, mathematical formulas, and deductive reasoning are useful tools for making decisions about our monumental world problems, they are not ends in themselves. Only when they are coupled with a humble understanding of the qualitative and irrational forces of human action can problems be examined holistically, realistically, and in their fullest light. As McNamara puts it in his second lesson: Rationality will not save us.
